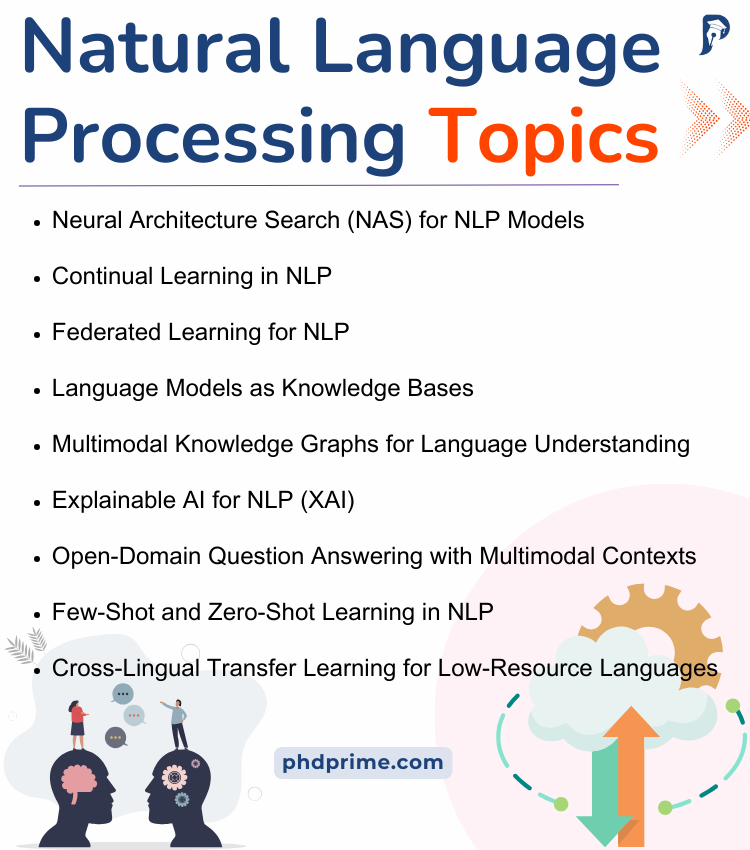

Natural Language Processing (NLP) has advanced on several fronts in recent years. Take a close look at the fascinating topics explained below, each of which reflects active research in the field. Entrust your implementation to our skilled developers and witness the magic performed by our experts. The following advanced topics represent some of the most creative research areas in NLP:

- Neural Architecture Search (NAS) for NLP Models

Explanation:

- Use NAS to automate the design of neural network architectures.

- Apply NAS to construct efficient architectures for specific NLP tasks.

Major Characteristics:

- Architecture search is effective for transformer models.

- It typically supports multi-objective optimization of model size and performance.

Research Queries:

- How can NAS improve performance while reducing computational cost in NLP models?

- What trade-offs exist between architectural complexity and model performance?

Tools and Approaches:

- NNI (Neural Network Intelligence), BERT, AutoGluon, OpenNAS.
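A minimal sketch of the multi-objective selection step, in Python. It assumes a tiny hypothetical search space (`SEARCH_SPACE`), estimates encoder size with the common 12·L·d² rule of thumb, and uses plain grid enumeration in place of the reinforcement-learning, evolutionary, or gradient-based strategies that real NAS tools such as NNI provide. Validation accuracy is assumed to come from training each candidate elsewhere.

```python
import itertools
from typing import Dict, List, Tuple

# Hypothetical search space; real NAS tools define spaces in their own formats.
SEARCH_SPACE: Dict[str, List[int]] = {
    "num_layers": [2, 4, 6],
    "hidden_size": [128, 256, 512],
}

Candidate = Dict[str, int]


def approx_params(num_layers: int, hidden_size: int) -> int:
    """Rough encoder size: ~12 * L * d^2 (4d^2 for Q/K/V/output projections plus
    8d^2 for a 4d-wide feed-forward block), ignoring embeddings, biases, layer norms."""
    return 12 * num_layers * hidden_size ** 2


def enumerate_candidates() -> List[Candidate]:
    """Exhaustive grid over the search space (a stand-in for a smarter search strategy)."""
    keys = list(SEARCH_SPACE)
    return [dict(zip(keys, values))
            for values in itertools.product(*(SEARCH_SPACE[k] for k in keys))]


def pareto_front(scored: List[Tuple[Candidate, float, int]]) -> List[Tuple[Candidate, float, int]]:
    """Keep (candidate, accuracy, size) entries that no other entry beats on both
    objectives: higher-or-equal accuracy AND fewer-or-equal parameters, strictly better on one."""
    front = []
    for cand, acc, size in scored:
        dominated = any(
            a2 >= acc and s2 <= size and (a2 > acc or s2 < size)
            for _, a2, s2 in scored
        )
        if not dominated:
            front.append((cand, acc, size))
    return front
```

Only candidates on the Pareto front are worth training further; choosing among them is where the size-versus-performance trade-off becomes explicit.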

- Continual Learning in NLP

Explanation:

- Continual learning systems should adapt to new tasks without forgetting previously learned ones.

- The aim is to create an efficient model that sustains performance across a sequence of NLP tasks.

Major Characteristics:

- Elastic weight consolidation (EWC) is used to avoid catastrophic forgetting.

- Replay-based techniques are usually efficient for retaining knowledge.

Research Queries:

- How can NLP models be made more resilient to catastrophic forgetting?

- Which strategies best balance plasticity and stability?

Tools and Approaches:

- Transformers, EWC, PyTorch, GEM (Gradient Episodic Memory), TensorFlow.
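The EWC idea can be sketched in plain Python: after training on an old task, estimate a diagonal Fisher information value per parameter, then penalize moving the important parameters while training on the new task. This simplified illustration works over flat lists of floats, and `lam` is a hypothetical regularization strength; in PyTorch the same penalty would be computed over the model's parameter tensors.

```python
from typing import List, Sequence


def diagonal_fisher(per_example_grads: Sequence[Sequence[float]]) -> List[float]:
    """Empirical diagonal Fisher: mean squared gradient of the old task's
    log-likelihood for each parameter, computed after training on that task."""
    n = len(per_example_grads)
    dim = len(per_example_grads[0])
    return [sum(g[i] ** 2 for g in per_example_grads) / n for i in range(dim)]


def ewc_penalty(params: Sequence[float], old_params: Sequence[float],
                fisher: Sequence[float], lam: float) -> float:
    """(lam / 2) * sum_i F_i * (theta_i - theta*_i)^2"""
    return 0.5 * lam * sum(f * (p - p0) ** 2 for p, p0, f in zip(params, old_params, fisher))


def ewc_loss(new_task_loss: float, params: Sequence[float], old_params: Sequence[float],
             fisher: Sequence[float], lam: float) -> float:
    """Total objective on the new task: its own loss plus the EWC anchor to the old solution."""
    return new_task_loss + ewc_penalty(params, old_params, fisher, lam)
```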

- Federated Learning for NLP

Explanation:

- Use federated learning to train NLP models on decentralized data sources without exchanging raw data.

- The goal is to preserve privacy while improving model performance across different domains.

Major Characteristics:

- Privacy-preserving model training.

- Both cross-device and cross-silo federated learning are covered.

Research Queries:

- How can federated learning systems be adapted to handle varying (non-IID) data distributions?

- How can data heterogeneity and privacy be balanced in federated NLP?

Tools and Approaches:

- TensorFlow Federated, Hugging Face Transformers, Flower (FL framework), PySyft.
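A toy sketch of federated averaging (FedAvg), the baseline aggregation rule in federated learning: each client trains locally, and only the resulting weights are sent back and averaged, weighted by local dataset size. The one-parameter model and `toy_local_train` are hypothetical stand-ins for fine-tuning an NLP model on each client; frameworks such as Flower or TensorFlow Federated handle the communication that is simulated here by a Python list.

```python
from typing import Callable, List, Sequence

Weights = List[float]


def fed_avg(client_weights: Sequence[Weights], client_sizes: Sequence[int]) -> Weights:
    """FedAvg aggregation: average client parameters weighted by local example counts."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def toy_local_train(weights: Weights, data: Sequence[float],
                    lr: float = 0.5, steps: int = 50) -> Weights:
    """Hypothetical local trainer: gradient descent on 0.5 * mean((w - x)^2) for a
    one-parameter model. Only this updated weight, never the raw data, leaves the client."""
    w = weights[0]
    for _ in range(steps):
        grad = sum(w - x for x in data) / len(data)
        w -= lr * grad
    return [w]


def federated_round(global_weights: Weights, clients: Sequence[Sequence[float]],
                    local_train: Callable[[Weights, Sequence[float]], Weights] = toy_local_train) -> Weights:
    """One communication round: broadcast the global model, train on each client, aggregate."""
    updates = [local_train(list(global_weights), data) for data in clients]
    sizes = [len(data) for data in clients]
    return fed_avg(updates, sizes)
```

Because the aggregate is weighted by example counts, one round of this toy setup recovers the mean of all clients' data without any client revealing its raw values.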

- Language Models as Knowledge Bases

Explanation:

- Investigate the ability of large language models to act as implicit knowledge bases.

- Develop approaches for querying models directly for factual information.

Major Characteristics:

- Implicit knowledge retrieval from pre-trained language models.

- Fact verification and knowledge augmentation are generally covered.

Research Queries:

- How accurate are language models as implicit knowledge bases?

- What strategies can improve factual accuracy in knowledge retrieval?

Tools and Approaches:

- Knowledge Graphs, GPT-3, T5, Retrieval-Augmented Generation (RAG).
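One common way to test a language model as an implicit knowledge base is cloze-style probing: facts from a knowledge base become fill-in-the-blank sentences, and the model's top prediction is compared with the gold object. The sketch below shows only that evaluation loop. `TEMPLATES` is hypothetical, and the `predict_top1` callable is an assumed wrapper around whatever masked or generative model is being probed.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical relation templates: "[X]" is the subject slot, "[MASK]" the object to predict.
TEMPLATES: Dict[str, str] = {
    "capital_of": "[X] is the capital of [MASK].",
    "born_in": "[X] was born in [MASK].",
}

Triple = Tuple[str, str, str]  # (subject, relation, object)


def to_cloze(subject: str, relation: str) -> str:
    """Fill the subject slot, leaving [MASK] for the model to complete."""
    return TEMPLATES[relation].replace("[X]", subject)


def precision_at_1(triples: List[Triple], predict_top1: Callable[[str], str]) -> float:
    """Fraction of facts whose top-1 prediction matches the gold object
    (case-insensitive exact match)."""
    hits = sum(
        predict_top1(to_cloze(s, r)).strip().lower() == o.lower()
        for s, r, o in triples
    )
    return hits / len(triples)
```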

- Multimodal Knowledge Graphs for Language Understanding

Explanation:

- Develop knowledge graphs that integrate visual and textual data for better language understanding.

- Incorporate graph neural networks (GNNs) for graph-based reasoning.

Major Characteristics:

- Multimodal graph representations of entities and relations.

- Graph-based reasoning for story generation and question answering.

Research Queries:

- How can visual and textual information be efficiently integrated in knowledge graphs?

- What influence do graph neural networks have on multimodal question answering?

Tools and Approaches:

- DGL (Deep Graph Library), OpenCV, PyTorch Geometric, Graph Neural Networks (GNNs).
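To illustrate how a GNN can reason over a multimodal knowledge graph, the sketch below runs one round of mean-aggregation message passing (similar in spirit to a GraphSAGE-style layer) over nodes whose features concatenate a text embedding and an image embedding. The weight matrices and embeddings are hypothetical inputs. Libraries such as DGL or PyTorch Geometric provide trainable, batched versions of such layers.

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def concat_features(text_vec: Sequence[float], image_vec: Sequence[float]) -> Vector:
    """Node feature = text embedding followed by image embedding."""
    return list(text_vec) + list(image_vec)


def matvec(W: Matrix, x: Sequence[float]) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def message_passing_layer(features: Dict[str, Vector], edges: List[Tuple[str, str]],
                          W_self: Matrix, W_neigh: Matrix) -> Dict[str, Vector]:
    """h_v' = ReLU(W_self h_v + W_neigh * mean_{u in N(v)} h_u), edges treated as undirected."""
    neighbors: Dict[str, List[str]] = {node: [] for node in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    updated = {}
    for node, h in features.items():
        nbrs = neighbors[node]
        if nbrs:
            agg = [sum(features[m][i] for m in nbrs) / len(nbrs) for i in range(len(h))]
        else:
            agg = [0.0] * len(h)  # isolated nodes only keep their own signal
        z = [a + b for a, b in zip(matvec(W_self, h), matvec(W_neigh, agg))]
        updated[node] = [max(0.0, value) for value in z]
    return updated
```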

- Explainable AI for NLP (XAI)

Explanation:

- Develop advanced explanation techniques to interpret complex NLP models.

- In particular, investigate counterfactual analysis and attention mechanisms for interpretability.

Major Characteristics:

- Post-hoc interpretability with LIME or SHAP.

- Attention visualization is effective for transformer models.

Research Queries:

- How can counterfactual explanations offer deeper insights into NLP systems?

- What biases exist in current explainability approaches?

Tools and Approaches:

- SHAP, LIME, Attention Visualization, ELI5 (Explain Like I’m Five).
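A simple perturbation-based explanation is leave-one-token-out occlusion: remove each token and measure how much the predicted probability drops. It is a cruder relative of LIME and SHAP, which fit local surrogate models or estimate Shapley values instead. The lexicon-based `toy_positive_proba` classifier is hypothetical; any function mapping tokens to a probability can be plugged in.

```python
import math
from typing import Callable, List, Tuple

# Hypothetical word weights for a toy sentiment classifier.
LEXICON = {"great": 2.0, "boring": -2.0}


def toy_positive_proba(tokens: List[str]) -> float:
    """Logistic function of summed lexicon weights = P(positive)."""
    score = sum(LEXICON.get(t.lower(), 0.0) for t in tokens)
    return 1.0 / (1.0 + math.exp(-score))


def occlusion_importance(tokens: List[str],
                         predict_proba: Callable[[List[str]], float]) -> List[Tuple[str, float]]:
    """Importance of token i = P(input) - P(input without token i).
    Positive values push toward the predicted class, negative values against it."""
    base = predict_proba(tokens)
    return [(tok, base - predict_proba(tokens[:i] + tokens[i + 1:]))
            for i, tok in enumerate(tokens)]
```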

- Ethical NLP and Fairness Detection

Explanation:

- Detect and reduce bias in NLP models to ensure fairness and inclusiveness.

- Construct a systematic framework for identifying and mitigating bias.

Major Characteristics:

- Bias detection with the Word Embedding Association Test (WEAT).

- Counterfactual data augmentation and adversarial training techniques are typically included.

Research Queries:

- How can comprehensive metrics for bias detection in NLP models be constructed?

- Which strategies are most effective in reducing bias across demographic groups?

Tools and Approaches:

- WEAT, AllenNLP Interpret, Fairlearn, AI Fairness 360.
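The WEAT effect size compares how strongly two target word sets X and Y associate with two attribute sets A and B in embedding space, using cosine similarity. A minimal sketch, assuming embeddings are already available as lists of floats; it uses the sample standard deviation, which some implementations replace with the population version.

```python
import math
import statistics
from typing import Sequence

Vec = Sequence[float]


def cosine(u: Vec, v: Vec) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def association(w: Vec, A: Sequence[Vec], B: Sequence[Vec]) -> float:
    """s(w, A, B): mean cosine to attribute set A minus mean cosine to attribute set B."""
    return statistics.mean(cosine(w, a) for a in A) - statistics.mean(cosine(w, b) for b in B)


def weat_effect_size(X: Sequence[Vec], Y: Sequence[Vec],
                     A: Sequence[Vec], B: Sequence[Vec]) -> float:
    """(mean_x s(x,A,B) - mean_y s(y,A,B)) / std_{w in X u Y} s(w,A,B).
    Positive: X leans toward A (and Y toward B)."""
    sx = [association(x, A, B) for x in X]
    sy = [association(y, A, B) for y in Y]
    return (statistics.mean(sx) - statistics.mean(sy)) / statistics.stdev(sx + sy)
```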

- Open-Domain Question Answering with Multimodal Contexts

Explanation:

- Develop an open-domain QA model that answers questions using both text and images.

- Build a retrieval-based model that combines multiple sources.

Major Characteristics:

- Multimodal context retrieval supports effective answer generation.

- The system can combine web search results and images.

Research Queries:

- How can text and image contexts be integrated to improve answer accuracy?

- Which retrieval strategies best handle multimodal contexts?

Tools and Approaches:

- Haystack, VisualBERT, Hugging Face Transformers, OpenAI GPT-3 API.
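A small sketch of retrieval over a mixed text-and-image corpus, assuming each image is represented by its caption so that one TF-IDF index can rank both modalities. Real systems typically use learned dense embeddings instead, and a vision-language model would then read the retrieved image itself. The corpus entries and IDs are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    return text.lower().split()


def build_idf(docs: List[str]) -> Dict[str, float]:
    """Smoothed inverse document frequency: log((n + 1) / (df + 1)) + 1."""
    n = len(docs)
    df: Counter = Counter()
    for d in docs:
        df.update(set(tokenize(d)))
    return {t: math.log((n + 1) / (c + 1)) + 1.0 for t, c in df.items()}


def tfidf(text: str, idf: Dict[str, float]) -> Dict[str, float]:
    tf = Counter(tokenize(text))
    return {t: c * idf.get(t, 0.0) for t, c in tf.items()}  # unseen query terms get weight 0


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(v * b.get(t, 0.0) for t, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str, corpus: List[Tuple[str, str, str]], k: int = 2) -> List[Tuple[float, str, str]]:
    """corpus items are (modality, id, text); images contribute their captions as text."""
    idf = build_idf([text for _, _, text in corpus])
    q = tfidf(query, idf)
    scored = [(cosine(q, tfidf(text, idf)), modality, doc_id) for modality, doc_id, text in corpus]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]
```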

- Few-Shot and Zero-Shot Learning in NLP

Explanation:

- Create NLP systems that can generalize to new tasks with few or zero labeled examples.

- Use large pre-trained language models such as GPT-3 and T5.

Major Characteristics:

- Meta-learning and prompt engineering are effective for better task generalization.

- Contrastive learning helps improve few-shot adaptation.

Research Queries:

- How can meta-learning improve zero-shot task generalization?

- Which prompts or task descriptions produce the best few-shot learning results?

Tools and Approaches:

- Hugging Face Transformers, Meta-Learning Libraries, GPT-3, T5.
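Few-shot prompting with large language models such as GPT-3 amounts to placing a task description and k labeled examples before the query; setting k to 0 gives the zero-shot case. The `Input:`/`Output:` layout below is one arbitrary choice among many, which is exactly the kind of variation a study of prompts would compare.

```python
from typing import List, Optional, Tuple


def build_few_shot_prompt(task_description: str, examples: List[Tuple[str, str]],
                          query: str, k: Optional[int] = None) -> str:
    """Task description, then k (input, label) demonstrations, then the unanswered query.
    k=None uses all examples; k=0 produces a zero-shot prompt."""
    shots = examples if k is None else examples[:k]
    lines = [task_description.strip(), ""]
    for text, label in shots:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model is expected to continue from here
    return "\n".join(lines)
```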

- Cross-Lingual Transfer Learning for Low-Resource Languages

Explanation:

- Create cross-lingual models that perform NLP tasks in low-resource languages.

- Use multilingual pre-trained models such as mBERT and XLM-R.

Major Characteristics:

- Zero-shot and few-shot learning techniques are effective for cross-lingual tasks.

- It involves pretraining on synthetic parallel corpora.

Research Queries:

- How can synthetic parallel corpora enhance cross-lingual transfer learning?

- Which strategies work best for domain adaptation in low-resource languages?

Tools and Approaches:

- mBERT, Hugging Face Transformers, XLM-R, IndicNLP.
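One inexpensive way to create synthetic cross-lingual training signal is dictionary-based code-switching: words in source-language sentences are randomly replaced with their translations from a bilingual lexicon before fine-tuning a multilingual model such as mBERT or XLM-R. A minimal sketch; the tiny English-to-Spanish dictionary in the test and the `ratio` parameter are illustrative assumptions, and a real low-resource setup would use an induced or curated lexicon for the target language.

```python
import random
from typing import Dict, List


def code_switch(tokens: List[str], bilingual_dict: Dict[str, str],
                ratio: float, seed: int = 0) -> List[str]:
    """Replace each token that has a dictionary entry with probability `ratio`.
    A fixed seed keeps the augmentation reproducible."""
    rng = random.Random(seed)
    out = []
    for tok in tokens:
        translation = bilingual_dict.get(tok.lower())
        if translation is not None and rng.random() < ratio:
            out.append(translation)
        else:
            out.append(tok)
    return out
```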

What is a good master's thesis topic in NLP sentiment analysis in 2025?

Numerous thesis topics are emerging in NLP sentiment analysis, but only some are well suited to a master's thesis. We suggest a few promising ideas:

- Aspect-Based Sentiment Analysis (ABSA) with Few-Shot Learning

Explanation:

- Build a sentiment analysis model that detects sentiment toward specific aspects, such as camera quality or battery life, using minimal labeled data.

- Apply few-shot learning approaches to handle new domains or aspects.

Major characteristics:

- Few-shot learning combined with meta-learning or contrastive learning.

- Multilingual aspect extraction using cross-lingual models such as XLM-R.

Research Queries:

- How effective are few-shot learning approaches in improving cross-domain ABSA?

- Which prompts or task descriptions yield the best few-shot performance?

Possible Datasets:

- Amazon Reviews (Multi-Domain)

- SemEval-2016 Task 5 (Restaurant Reviews)

Tools and Approaches:

- Prototypical Networks, XLM-R, Hugging Face Transformers (T5), GPT-3.
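Prototypical networks classify a query by comparing its embedding with the mean embedding (prototype) of each class's few labeled support examples. A sketch of the classification step, assuming aspect-in-context embeddings have already been produced by an encoder such as XLM-R; the two-dimensional vectors in the test are toy values.

```python
from typing import Dict, List, Sequence, Tuple

Embedding = Sequence[float]


def compute_prototypes(support: List[Tuple[Embedding, str]]) -> Dict[str, List[float]]:
    """Prototype for each label = mean of that label's support embeddings."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for emb, label in support:
        if label not in sums:
            sums[label] = [0.0] * len(emb)
            counts[label] = 0
        sums[label] = [s + e for s, e in zip(sums[label], emb)]
        counts[label] += 1
    return {label: [s / counts[label] for s in vec] for label, vec in sums.items()}


def squared_distance(a: Embedding, b: Embedding) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query: Embedding, prototypes: Dict[str, List[float]]) -> str:
    """Assign the label of the nearest prototype (squared Euclidean distance)."""
    return min(prototypes, key=lambda label: squared_distance(query, prototypes[label]))
```

During meta-training, the same distances would be turned into a softmax over labels and the encoder trained on many sampled episodes; only inference is shown here.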

- Sentiment Analysis with Multimodal Learning in Social Media Posts

Explanation:

- Use multimodal learning to analyze sentiment in social media posts that contain both text and images.

- Integrate textual features from transformer models with visual features from convolutional neural networks (CNNs).

Major characteristics:

- Joint attention mechanisms are effective for aligning text and image features.

- Multimodal classification with transformer-based models.

Research Queries:

- How does combining textual and visual features improve sentiment analysis accuracy?

- Which attention mechanisms are most effective for multimodal learning?

Possible Datasets:

- Flickr30k, MS COCO (with captions)

- Twitter Multimodal Sentiment Analysis (TMMS)

Tools and Approaches:

- VisualBERT, OpenCV, MMF (Facebook AI), LXMERT.
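A minimal sketch of attention-based fusion: each text token attends over image region features with scaled dot-product attention, and the pooled text and attended image features are concatenated for a downstream sentiment classifier. It assumes text and image features were already projected to the same dimension, and it omits the learned query, key, and value projections that models such as VisualBERT or LXMERT train.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_tokens: Matrix, image_regions: Matrix) -> Tuple[Matrix, Matrix]:
    """Text tokens are queries; image regions serve as both keys and values."""
    d = len(text_tokens[0])
    attended, weights = [], []
    for q in text_tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in image_regions]
        w = softmax(scores)
        weights.append(w)
        attended.append([sum(wi * region[j] for wi, region in zip(w, image_regions))
                         for j in range(len(image_regions[0]))])
    return attended, weights


def mean_pool(vectors: Matrix) -> List[float]:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def fuse(text_tokens: Matrix, image_regions: Matrix) -> List[float]:
    """Pooled text features followed by pooled image-aware features."""
    attended, _ = cross_attention(text_tokens, image_regions)
    return mean_pool(text_tokens) + mean_pool(attended)
```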

- Explainable Sentiment Analysis Using Transformer Models

Explanation:

- Build a transformer-based sentiment analysis model that explains its predictions.

- Use counterfactual explanations and attention visualization to interpret the model's predictions.

Major characteristics:

- Attention visualization is effective for explainability.

- Counterfactual explanations are generated using contrastive learning or adversarial examples.

Research Queries:

- How can counterfactual explanations improve the interpretability of sentiment analysis models?

- What biases exist in attention-based explanations?

Possible Datasets:

- Yelp Reviews, IMDb Reviews, SST-2

Tools and Approaches:

- Transformers (BERT, RoBERTa), ELI5, LIME, SHAP.
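A counterfactual explanation answers the question: what minimal change to the input would flip the prediction? The sketch searches single-word substitutions from a hypothetical `ANTONYMS` table and returns the first edit that changes the label of a hypothetical lexicon classifier. A real study would plug in a fine-tuned BERT or RoBERTa model and a richer perturbation strategy.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical word weights and substitution candidates.
LEXICON = {"great": 2.0, "good": 1.0, "bad": -1.0, "terrible": -2.0, "boring": -1.5}
ANTONYMS = {"great": "terrible", "good": "bad", "bad": "good",
            "terrible": "great", "boring": "exciting"}


def predict_label(tokens: List[str]) -> str:
    """Toy classifier: positive if summed lexicon weights exceed zero."""
    score = sum(LEXICON.get(t, 0.0) for t in tokens)
    return "positive" if score > 0 else "negative"


def single_edit_counterfactual(tokens: List[str], predict: Callable[[List[str]], str],
                               substitutes: Dict[str, str]) -> Optional[List[str]]:
    """Return the first one-word substitution that flips the prediction, or None."""
    original = predict(tokens)
    for i, tok in enumerate(tokens):
        sub = substitutes.get(tok)
        if sub is None:
            continue
        edited = tokens[:i] + [sub] + tokens[i + 1:]
        if predict(edited) != original:
            return edited
    return None
```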

- Bias Detection and Mitigation in Sentiment Analysis Models

Explanation:

- Study the biases present in sentiment analysis systems and develop approaches to reduce them.

- Investigate domain bias (e.g., across industries and topics) and demographic bias (e.g., race and gender).

Major characteristics:

- The Word Embedding Association Test (WEAT) is effective for bias detection.

- Data augmentation and adversarial training are suitable for bias mitigation.

Research Queries:

- How do demographic and domain biases affect sentiment analysis models?

- Which strategies are effective in reducing bias in transformer-based models?

Possible Datasets:

- Twitter Sentiment Analysis (TSA), IMDb Reviews, SST-2

Tools and Approaches:

- Hugging Face Transformers, WEAT, Fairlearn.
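Counterfactual data augmentation (CDA) adds a copy of each training example with demographic terms swapped, and the same swap gives a simple bias probe: a fair model should score the original and swapped sentences similarly. A sketch with a small hypothetical gender word list and whitespace tokenization (swapped tokens come out lowercase); ambiguous words such as "her" (which maps to "his" or "him") are deliberately left out.

```python
from typing import Callable, List, Tuple

# Hypothetical, deliberately small list of unambiguous term pairs.
PAIRS = [("he", "she"), ("man", "woman"), ("boy", "girl"), ("father", "mother"), ("king", "queen")]
SWAP = {**{a: b for a, b in PAIRS}, **{b: a for a, b in PAIRS}}


def swap_terms(text: str) -> str:
    """Swap each listed term for its counterpart; other tokens are kept as-is."""
    return " ".join(SWAP.get(tok.lower(), tok) for tok in text.split())


def augment(dataset: List[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """CDA: keep the original examples and add swapped copies with the same label."""
    out = list(dataset)
    for text, label in dataset:
        swapped = swap_terms(text)
        if swapped != text:
            out.append((swapped, label))
    return out


def counterfactual_gap(texts: List[str], score: Callable[[str], float]) -> float:
    """Mean absolute change in a model's sentiment score under the swap (0 = no measured gap)."""
    diffs = [abs(score(t) - score(swap_terms(t))) for t in texts]
    return sum(diffs) / len(diffs)
```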

- Sentiment Analysis for Financial Market Prediction

Explanation:

- Analyze sentiment in social media posts or financial news to forecast stock market trends.

- Evaluate sentiment-based trading strategies on historical stock data.

Major characteristics:

- It covers time-series analysis of financial data and sentiment scores.

- Transformer models are fine-tuned for sentiment classification on financial text.

Research Queries:

- How can sentiment analysis improve the forecasting of financial market trends?

- Which trading strategies benefit most from sentiment-based forecasts?

Possible Datasets:

- Stock Market Data (S&P 500, NASDAQ)

- Financial PhraseBank, Yahoo Finance News

Tools and Approaches:

- T5, scikit-learn, FinBERT, Prophet.
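Before training a forecasting model, it is worth checking whether daily sentiment carries any signal at all. The sketch computes the Pearson correlation between day-t sentiment and the return from day t to t+1, and backtests a naive long-or-flat rule. It assumes sentiment is available by the close of day t (to avoid look-ahead bias) and ignores transaction costs; scores could come from a model such as FinBERT, and the threshold is hypothetical.

```python
import math
from typing import List, Sequence


def simple_returns(prices: Sequence[float]) -> List[float]:
    """Return from day t to day t+1 for each consecutive pair of closing prices."""
    return [prices[t + 1] / prices[t] - 1.0 for t in range(len(prices) - 1)]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def backtest_long_flat(sentiment: Sequence[float], prices: Sequence[float],
                       threshold: float = 0.0) -> float:
    """Hold the asset from day t to t+1 only when day-t sentiment exceeds the threshold;
    otherwise stay in cash. Returns the total compounded return."""
    growth = 1.0
    for s, r in zip(sentiment, simple_returns(prices)):
        if s > threshold:
            growth *= 1.0 + r
    return growth - 1.0
```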

- Cross-Lingual Sentiment Analysis for Low-Resource Languages

Explanation:

- Build a sentiment analysis model that works across multiple languages with limited labeled data.

- Use multilingual pre-trained models such as XLM-R and apply cross-lingual transfer learning.

Major characteristics:

- Cross-lingual sentiment analysis is performed through zero-shot or few-shot learning.

- It covers transfer learning and domain adaptation approaches.

Research Queries:

- How can cross-lingual transfer learning enhance sentiment analysis in low-resource languages?

- What translation biases are present in cross-lingual sentiment analysis models?

Possible Datasets:

- Twitter Sentiment Analysis (TSA), Multilingual Amazon Reviews

Tools and Approaches:

- mBERT, IndicNLP, XLM-R, Hugging Face Transformers.

- Sentiment Analysis for Conversational AI Systems

Explanation:

- Use a sentiment analysis model to improve the understanding and responses of conversational AI systems.

- Implement sentiment detection in mental health chatbots or customer support chatbots.

Major characteristics:

- Real-time sentiment detection is integrated into dialogue management.

- Sentiment-aware conversation flow improves user engagement.

Research Queries:

- How can real-time sentiment detection improve user satisfaction in conversational AI?

- Which strategies improve the understanding of sentiment-aware conversation flows?

Possible Datasets:

- Conversational Sentiment Analysis Dataset (CSAD), DailyDialog

Tools and Approaches:

- GPT-3, Rasa, T5, DialoGPT.
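A sketch of sentiment-aware dialogue management: a moving average of per-turn sentiment scores decides when a support conversation should be escalated to a human. Scores are assumed to lie in [-1, 1] and to come from any sentiment classifier. The window size and threshold are hypothetical policy parameters that a study would tune against user-satisfaction data.

```python
from collections import deque


class SentimentTracker:
    """Tracks recent per-turn sentiment and flags conversations for escalation."""

    def __init__(self, window: int = 3, escalate_below: float = -0.3) -> None:
        self.scores: deque = deque(maxlen=window)  # oldest turns drop out automatically
        self.escalate_below = escalate_below

    def update(self, score: float) -> float:
        """Record the latest user turn's sentiment and return the current moving average."""
        self.scores.append(score)
        return self.average()

    def average(self) -> float:
        return sum(self.scores) / len(self.scores) if self.scores else 0.0

    def should_escalate(self) -> bool:
        """Escalate only once the window is full and the average is sufficiently negative."""
        return len(self.scores) == self.scores.maxlen and self.average() < self.escalate_below
```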

Natural Language Processing Topics

The Natural Language Processing topics listed below have recently been explored by the experts at phdprime.com. Our primary focus is on innovation, so feel free to share your research concerns with us, and we will provide you with the best ideas in your field.

- Neural finite-state transducers: a bottom-up approach to natural language processing

- Audio to Sign Language conversion using Natural Language Processing

- Motion recognition by natural language including success and failure of tasks for co-working robot with human

- Natural Language Processing and Bayesian Networks for the Analysis of Process Safety Events

- Customer data extraction techniques based on natural language processing for e-commerce business analytics

- Analyzation of Sentiments of Product Reviews using Natural Language Processing

- Design and Implementation of System of the Web Vulnerability Detection Based on Crawler and Natural Language Processing

- Platform of the Web vulnerability detection Based on Natural Language Processing

- A review on applied Natural Language Processing to Electronic Health Records

- A Framework for Early Detection of Cyberbullying in Chinese-English Code-Mixed Social Media Text Using Natural Language Processing and Machine Learning

- A critical survey on the use of Fuzzy Sets in Speech and Natural Language Processing

- An Introduction of Deep Learning Based Word Representation Applied to Natural Language Processing

- A Survey of Natural Language Processing Implementation for Data Query Systems

- Automatic Indonesia’s questions classification based on bloom’s taxonomy using Natural Language Processing a preliminary study

- Enhancing Speech Based Information Service with Natural Language Processing

- Comparative Question Answering System based on Natural Language Processing and Machine Learning

- Automated Genre Classification of Books Using Machine Learning and Natural Language Processing

- Twitter Spam Detection Using Natural Language Processing by Encoder Decoder Model

- A Toolkit for Text Extraction and Analysis for Natural Language Processing Tasks

- A review of the application of natural language processing in clinical medicine